How to Make a Quick Broken Link Checker with Lynx Browser

Lynx is a text-only Web browser that is useful for quick SEO tasks, like checking text and links on a Web page. If you haven’t used it before, check out our basic Lynx tutorial and then come back here to see how to reuse that code to check a Web page for broken links.

By the end of this tutorial, you’ll have a new, custom program on your computer that you can run on any Web page by typing a command like this:

broken_link_checker https://example.com/

A Quick Introduction to Shell Scripting

A shell script is a bunch of terminal commands that are put into a text file. When you execute the text file, the commands run. Shell scripts are useful for automating your computer when you need to run many commands at once.

Creating the File and Making It Executable

In order to execute the file as a program, you have to give the file executable permissions by running a command on it.

To create the file, open a terminal and create a new directory for your scraper using the mkdir (make directory) command:

mkdir lynx_scraper

Then change into that directory with the cd (change directory) command:

cd lynx_scraper

Create a new file called broken_link_checker (no file extension) with the touch command:

touch broken_link_checker

The next step is to make the file executable (+x), which you can do with the chmod command:

chmod +x broken_link_checker

At that point, you’re ready to start writing the script. Open the file in your favorite code editor. If you’re using VS Code, you can probably type code . (or Code . on Windows) to launch VS Code in the current directory.
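To confirm that the chmod step worked, you can test the file's executable bit from the terminal. This is a minimal sketch; the helper name `is_executable` is made up for illustration and is not part of the tutorial's script.

```shell
# Hypothetical helper: report whether a file is executable by the current user.
is_executable () {
  if [ -x "$1" ]; then
    echo "$1 is executable"
  else
    echo "$1 is NOT executable"
  fi
}

# Run this from inside the lynx_scraper directory.
is_executable broken_link_checker
```

If the file is reported as not executable, re-running the chmod command from the same directory is the likely fix.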

Writing the Script

Because the computer doesn’t rely on a file extension to decide how to run a script, the file needs a special line at the top (called a shebang) that tells it which programming language to use to execute the file. In this case, the language is Bash.

#!/usr/bin/env bash

Testing the Script

You can test your script by creating a variable named MESSAGE and printing out its value with an echo statement:

#!/usr/bin/env bash

MESSAGE="hello world"
echo "$MESSAGE"

Save the file, and then run the program in the terminal like this:

./broken_link_checker

(The leading dot and slash are important. The reason why there isn’t a file extension will become apparent later.)

The program should print out the text “hello world”.

Fetching the Links on a Web Page

In the last Lynx tutorial, we created a reusable function that extracts all the links on a Web page:

extract_links () {
  lynx --listonly \
    --nonumbers \
    --display_charset=utf-8 \
    --dump "$1" \
    | grep "^http" \
    | sort \
    | uniq
}

For simplicity’s sake, we’ll copy part of that code into our new script.

The broken_link_checker file should be updated so that it looks like this:

#!/usr/bin/env bash

# Assign the output of the `lynx` command to a variable named `URLS`.
URLS=$(lynx \
  --listonly \
  --nonumbers \
  --display_charset=utf-8 \
  --dump "$1" \
  | grep "^http" \
  | sort \
  | uniq)

# Print out the contents of the `URLS` variable.
echo $URLS

The $() syntax is called command substitution. It replaces the command with the value of its output.
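Command substitution can be tried without Lynx. The sketch below captures the output of `printf` instead, using two placeholder URLs (not real Lynx output), and shows why an unquoted `echo` prints everything on one line.

```shell
# Command substitution: capture a command's output in a variable.
# The URLs below are placeholders, not real output from lynx.
SAMPLE=$(printf '%s\n' "https://example.com/a" "https://example.com/b")

# Unquoted: Bash splits the value on whitespace, so echo joins the words with spaces.
echo $SAMPLE

# Quoted: the newline is preserved, so each URL prints on its own line.
echo "$SAMPLE"
```

The script above leaves `$URLS` unquoted in the `echo`, which is why its output is space-separated.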

As in the Bash function, the $1 will be replaced by whatever you pass into the script.

So if you run the program like this:

./broken_link_checker https://example.com/

then the $1 will be replaced by https://example.com/. The $1 refers to the first thing that comes after the name of the program.
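If the program is run without a URL, `$1` is empty. One way to handle that is a small guard like the sketch below. The function name `require_url` and the wording of the usage message are assumptions made for illustration; the tutorial's script doesn't include this check.

```shell
# Hypothetical guard: print a usage message when no URL is given.
require_url () {
  if [ -z "${1:-}" ]; then
    echo "Usage: broken_link_checker URL" >&2
    return 1
  fi
}

# With an argument the guard succeeds; without one it fails.
require_url "https://example.com/" && echo "URL given"
require_url || echo "no URL given"
```

In the script itself, a line such as `require_url "$1" || exit 1` near the top would stop the program before Lynx runs.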

Try running the code on my scraper testing site like this:

./broken_link_checker https://scrape-target.netlify.app/

You should see a bunch of space-separated URLs as the output. Those are the links that were found on the page that you specified.

Checking the HTTP Status Codes

The next step is to check each of those links for a 200 OK HTTP status code. The program will let us know if any of the URLs send a different status code (like 404 for a broken link).

Our script will perform these tasks:

- Loop over each URL.

- Fetch the URL with a free program called curl.

- Print out the HTTP status code.

- Save the output to a file.

Before adding the loop, it’s a good idea to try the curl command that will go in the loop.

Try running this command in the terminal to see what it does:

curl -o /dev/null -s -w "%{http_code}" https://scrape-target.netlify.app/

It should print out the number 200, which is the HTTP status code from that Web page. It means that the page was found and the server believes that it was sent correctly.

Here’s how that command works:

- curl: This is the name of the program we’re running.

- -o /dev/null: This sends most of the response into the void in order to prevent it from showing up in the output.

- -s: This turns on “silent” mode to keep curl from printing out a progress bar.

- -w "%{http_code}": This tells curl to print out the HTTP status code, which is the data we want from the given URL.

- https://scrape-target.netlify.app/: This is the URL to scan.
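The same flags can be wrapped in a small function so that the command is easier to reuse. This is only a sketch: the name `http_status` is hypothetical, and the `--max-time 10` timeout is an added assumption that the tutorial's command doesn't use.

```shell
# Hypothetical helper: print only the HTTP status code for one URL.
http_status () {
  # --max-time 10 is an added assumption so that a hung server
  # cannot stall the whole run. The other flags match the command above.
  curl -o /dev/null -s -w "%{http_code}" --max-time 10 "$1"
}

# Example call (needs network access, so it is left commented out):
# http_status https://scrape-target.netlify.app/
```

When curl gets no HTTP response at all (for example, if the connection fails or times out), it reports the status code as 000.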

Looping Over the URLs

The next step is to turn the chunk of space-separated URLs into a Bash array that can be looped over. Here’s the command for that:

URLS_ARR=($URLS)

Then we’re going to loop over that new URLS_ARR. The syntax for a loop looks like this:

# This weird syntax is how you loop over an array in Bash.
# The array in this case is named `URLS_ARR`.
for URL in "${URLS_ARR[@]}"; do
  # The curl code will go here.
  # We can access the current URL as $URL.
  : # Placeholder no-op, because Bash doesn't allow an empty loop body.
done

The code to run the curl command is a little more complex. To print it out, we’ll use the echo command again, but that means that the curl command needs to be wrapped with $(). The URL will be passed into the loop as $URL, and it’s wrapped with quotes in case there are spaces in the URL.

Here’s what that looks like:

echo $(curl -o /dev/null -s -w "%{http_code}" "$URL")

To make the script more useful, we’ll also print out the URL after the status code, or we won’t be able to tell which URL the status code is for. The \t means “insert a TAB character”, and using \t requires us to add -e after the echo command.

(Don’t worry too much if you don’t understand everything right away. It often takes a few Google searches to remember all the syntax when writing scripts.)
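As an aside, the array and loop pieces can be tried without any network access. The sketch below uses two placeholder URLs and a made-up `fake_status` function in place of curl, and it prints each row with `printf` as an alternative to `echo -e`. None of these names come from the tutorial's script.

```shell
# Placeholder URLs standing in for the output of lynx.
URLS="https://example.com/a https://example.com/b"

# Unquoted expansion splits the string on whitespace into array elements.
URLS_ARR=($URLS)
echo "Found ${#URLS_ARR[@]} URLs"

# Hypothetical stand-in for the curl call so the sketch runs offline.
fake_status () { echo "200"; }

for URL in "${URLS_ARR[@]}"; do
  # printf interprets \t in its format string, so no -e flag is needed.
  printf '%s\t%s\n' "$(fake_status "$URL")" "$URL"
done
```

The tutorial continues with `echo -e`, which works the same way for this purpose.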

So the final command for the interior of the loop looks like this:

echo -e $(curl -o /dev/null -s -w "%{http_code}" "$URL") "\t$URL"

Putting it all together, the program should now look like this:

#!/usr/bin/env bash

URLS=$(lynx \
  --listonly \
  --nonumbers \
  --display_charset=utf-8 \
  --dump "$1" \
  | grep "^http" \
  | sort \
  | uniq)

URLS_ARR=($URLS)

for URL in "${URLS_ARR[@]}"; do
  echo -e $(curl -o /dev/null -s -w "%{http_code}" "$URL") "\t$URL"
done

Running the Completed Broken Link Checker

You can now run the program by giving it the URL of the page that you want to check for broken links:

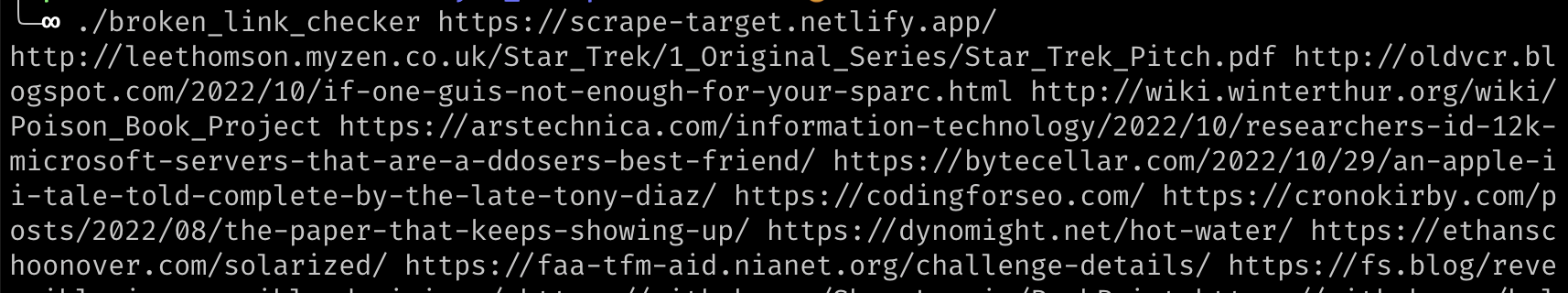

./broken_link_checker https://scrape-target.netlify.app/

The output should look something like this, with the TAB character separating it into nice columns:

$ ./broken_link_checker https://scrape-target.netlify.app/
200 http://leethomson.myzen.co.uk/Star_Trek/1_Original_Series/Star_Trek_Pitch.pdf
200 http://oldvcr.blogspot.com/2022/10/if-one-guis-not-enough-for-your-sparc.html
200 http://wiki.winterthur.org/wiki/Poison_Book_Project
200 https://arstechnica.com/information-technology/2022/10/researchers-id-12k-microsoft-servers-that-are-a-ddosers-best-friend/
200 https://bytecellar.com/2022/10/29/an-apple-ii-tale-told-complete-by-the-late-tony-diaz/
200 https://codingforseo.com/

If you want to save the output to a file, just add a > character and filename after the command like this:

./broken_link_checker https://scrape-target.netlify.app/ > broken_link_report.tsv

Using TABs to separate the columns lets us open the report in a spreadsheet program.
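The saved report can also be filtered in the terminal so that only the problem links remain. The following is a rough sketch rather than part of the tutorial: the function name `broken_rows` is made up, and the sample rows are placeholder data, not real results.

```shell
# Hypothetical helper: print the report rows whose status code is not 200.
broken_rows () {
  # awk splits each line on whitespace by default, so $1 is the status code.
  awk '$1 != 200' "$1"
}

# Demonstrate with a small placeholder report in a temporary file.
REPORT=$(mktemp)
printf '200\thttps://example.com/\n404\thttps://example.com/missing\n' > "$REPORT"
broken_rows "$REPORT"
rm -f "$REPORT"
```

On a real report, you would run `broken_rows broken_link_report.tsv`. Redirects such as 301 would also be listed, because this filter treats every code other than 200 as worth a look.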

Why the Program Doesn’t Have a File Extension

If you want to run this script from anywhere, you can put it somewhere on your PATH. On my computer, I put it in a folder at ~/bin/ and added that folder to the PATH by adding this to my ~/.zshrc file:

export PATH="$HOME/bin:$PATH"

($HOME expands to your home directory, so you don’t need to type out your username. A ~ inside double quotes would not be expanded the same way. If you use Bash by default, add that line to your ~/.bashrc file instead of your ~/.zshrc file.)

After the broken_link_checker script is on your PATH, you can run it with just the filename from anywhere on your computer. Because the filename has no extension, the command looks like any other program name:

broken_link_checker https://example.com/
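If the command isn't found, it can help to check whether the folder actually made it onto the PATH. Here is a minimal sketch, assuming the script lives in a bin folder in your home directory as described above; the helper name `dir_on_path` is hypothetical.

```shell
# Hypothetical helper: succeed if a directory is listed in PATH.
dir_on_path () {
  # Wrapping both sides in colons lets the pattern match whole entries only.
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

if dir_on_path "$HOME/bin"; then
  echo "$HOME/bin is on PATH"
else
  echo "$HOME/bin is not on PATH yet"
fi
```

Changes to ~/.zshrc or ~/.bashrc only take effect in new terminal sessions, so open a new terminal before checking. Running `command -v broken_link_checker` afterward should print the full path to the script.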