Web Scraping in Node.js with Cheerio

Cheerio is a JavaScript library for parsing HTML and querying it using a syntax that is similar to jQuery.

How to Install Cheerio

Cheerio can be installed using npm, pnpm, or yarn.

If you don’t have an existing Node.js project ready, create an empty folder and run one of these commands in it, depending on whether you are going to use npm or yarn in the project (if unsure, choose npm):

npm initor:

yarn initor:

pnpm initThe command will ask you some basic questions about your new project.

Then install Cheerio with one of these commands, depending on whether you’re using npm or yarn:

npm install cheerioor:

yarn add cheerioor:

pnpm add cheerioHow to Do Web Scraping with Cheerio

In this first example, we’ll scrape a simple page that I deployed on Netlify.

Most of the content there is rendered as plain HTML, but some of it is rendered with JavaScript. With the method we’re using, Cheerio will only be able to see server-rendered HTML — not the client-rendered HTML.

The first step is to fetch the remote HTML. You can use the fetch function for that.

Create a file named first-scraper.mjs. (The .mjs extension will allow you to use ES6-style imports in Node.js. For details, see The Difference Between MJS, CJS, and JS Files in Node.js.)

Put this code in the file:

// We're going to use the `await` keyword, so this has to be an `async`

// function.

async function main() {

const url = "https://scrape-target.netlify.app/";

// Fetch the URL and wait for the response.

const response = await fetch(url);

// Extract the text of the response.

const html = await response.text();

// Print out the HTML to inspect it.

console.log(html);

}

// Run the function.

main();Run the program with this command, and it should print out the HTML source code of the page:

node first-scraper.mjsIf that works, you’re on the right track.

Next, edit your code so that it looks like this:

// Import the Cheerio library.

import * as cheerio from "cheerio";

async function main() {

const url = "https://scrape-target.netlify.app/";

const response = await fetch(url);

const html = await response.text();

// Load the HTML into cheerio so that it can be parsed. `$` is now a

// function that can take a CSS selector that targets the HTML elements on

// the page that you want to scrape.

const $ = cheerio.load(html);

// This selects all the elements that match the CSS selector `li a`. Then it

// loops over each item and prints out the title of the scraped link.

$("li a").each((idx, el) => {

// This wraps up `el` with Cheerio so that we can call the `.text()`

// method on it.

const cheeriofied = $(el);

// Generate the output.

const output = `${idx + 1}.\t${cheeriofied.text()}`;

// Print the output.

console.log(output);

});

}

main();Now run the program again, and it should print out the list of scraped items.

If you want to know why the CSS selector is "li a", take a look at the HTML source code on the target page.

<li>

<a target="_blank" class="article-link" href="https://github.com/susam/hello"

>A 23-byte “hello, world” program assembled with DEBUG.EXE in MS-DOS</a

>

</li>The a element that we’re trying to target is a child of li. So li a will return just those a elements.

Another way to target those links is to look at the class="article-link" part. We could be even more specific by changing this line:

$("li a").each((idx, el) => {to this:

$("li a.article-link").each((idx, el) => {That would ensure that only links with the class article-link would get scraped.

Take a closer look at the target page and try scraping some other parts of the page. Can you figure out how to scrape the paragraph text? How about the text in the <noscript> element?

Here are the Cheerio docs for reference.

Building a NY Times News Scraper

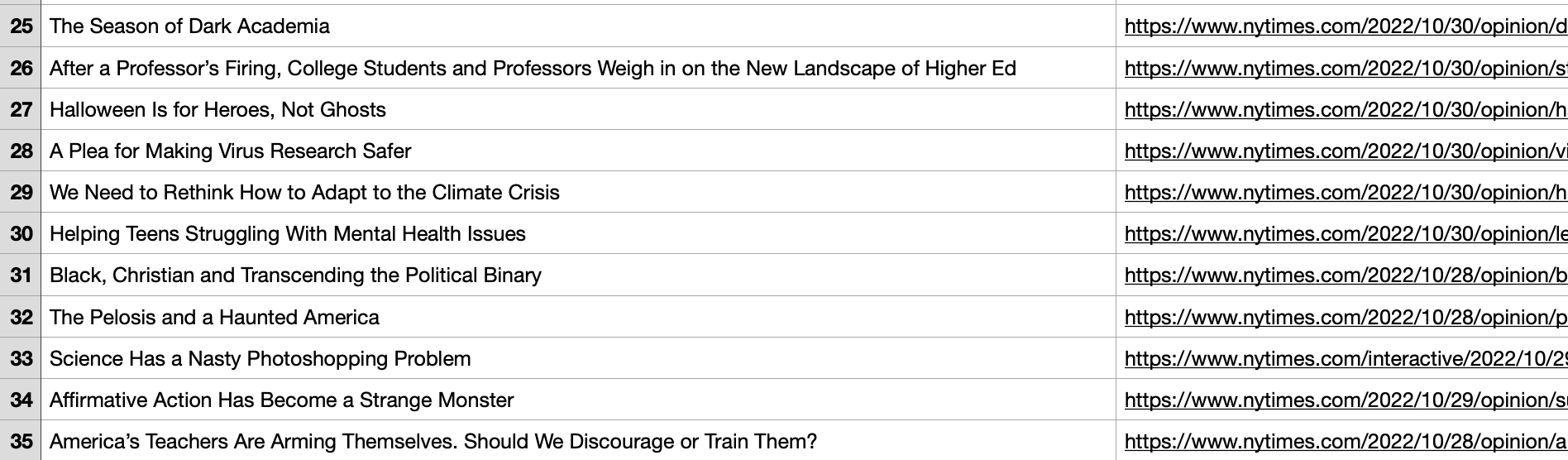

In this second example, we’ll create a scraper that grabs the latest headlines on the NY Times. This one uses selectors that are a little trickier. It also saves the output to a TSV file that can be opened in a spreadsheet program.

Create a file called news-scraper.mjs.

Read the code comments below to see how it works.

// `fs` is Node's library for working with files. We're going to save the output

// to a file, so we need to import it.

import fs from "fs";

import * as cheerio from "cheerio";

// This program will use the `await` keyword, so the function needs to be

// `async`.

async function main() {

// Define the URL to scrape and then fetch the HTML.

const url = "https://www.nytimes.com/";

const response = await fetch(url);

const html = await response.text();

// Load the HTML string into cheerio so that it can be queried.

const $ = cheerio.load(html);

// Then you can use jQuery-style CSS selectors to extract data from the

// HTML.

const headlines = $(".story-wrapper a");

// Create the header line for a tab-separated spreadsheet file. `\t`

// represents a TAB. There will be three columns in the spreadsheet: "#",

// "Title", and "URL".

const header = "#\tTitle\tURL";

// Each row of the spreadsheet will be pushed into the `lines` array. The

// `header` is the first row of the spreadsheet.

const lines = [header];

// Because `headlines` was created by Cheerio, it has extra methods on it.

// The `each` method will loop over each element returned by the `$()`

// command used earlier.

headlines.each((idx, el) => {

// Get the `href` attribute from the `<a>` element.

const link = $(el).attr("href");

// Get the text of the headline using `$()` with a CSS selector in it.

const title = $("h3.indicate-hover", el).text();

// Push the current headline's data into the `lines` array in three

// TAB-separated columns.

lines.push(`${idx + 1}\t${title}\t${link}`);

});

// Join the lines of the TAB-separated rows.

const output = lines.join("\n");

// This creates a new filename based on the current timestamp so you can see

// when the program ran, and also to prevent the script from overwriting old

// TSV files.

const filename = `${Date.now()}-nytimes-headlines.tsv`;

// Write the output to a TSV file.

fs.writeFileSync(filename, output);

console.log("wrote file", filename);

}

// Run the function.

main().then(() => console.log("finished"));Run the program like this:

node news-scraper.mjsHere’s part of the output of the program opened in a spreadsheet program:

If the NY Times changes their HTML in the future, the script will need updating. You can do that by right-clicking on a headline and choosing “Inspect”. Then look for the CSS selectors that you want to target. Put the CSS selector in the $() function. These are the two lines that should be changed:

const headlines = $("your css selector goes here");

// ...

const title = $("your other css selector goes here", el).text();If you have questions, leave a comment below.

Further Reading

If you found this post useful, you might also be interested in How to Use curl for Web Development and SEO.